Authors: Macks Tam, Spencer Tam, and Tian Xia

💻 Source Code: Web and VR, Toio

Design Summary

For our co-located/remote communication/interaction project, we chose a less common path: human-pet interaction. Specifically, we want to help owners and pets stay connected when they are separated for long periods, such as during business trips or vacations where the pet cannot come along. After exploring many ideas, we decided to build a video messaging app that supports asynchronous video message exchange, haptic feedback matching the senders’ gestures, and video viewing in virtual reality.

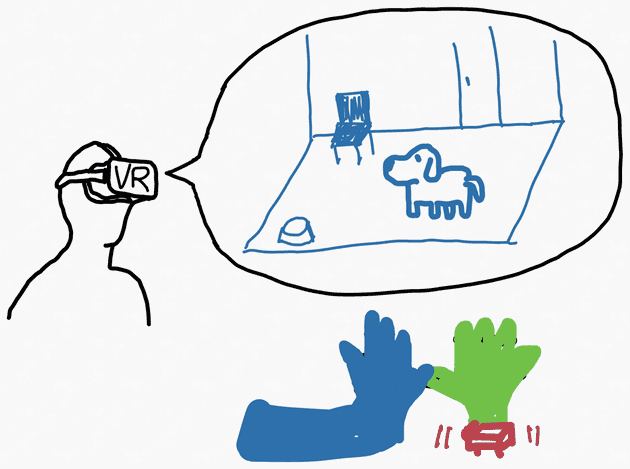

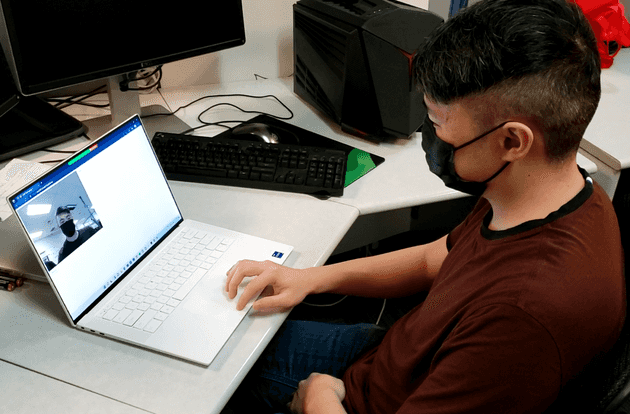

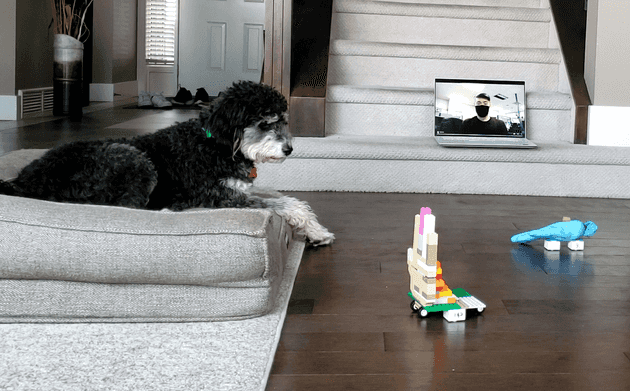

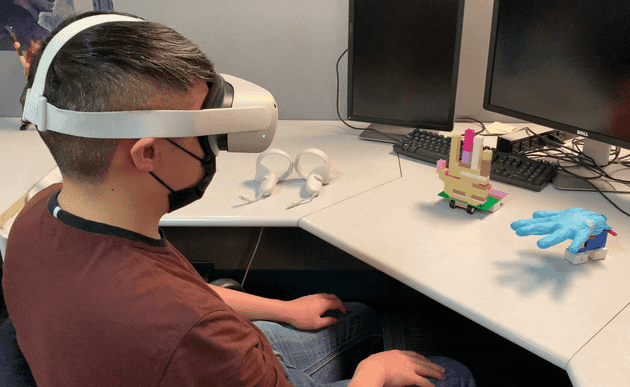

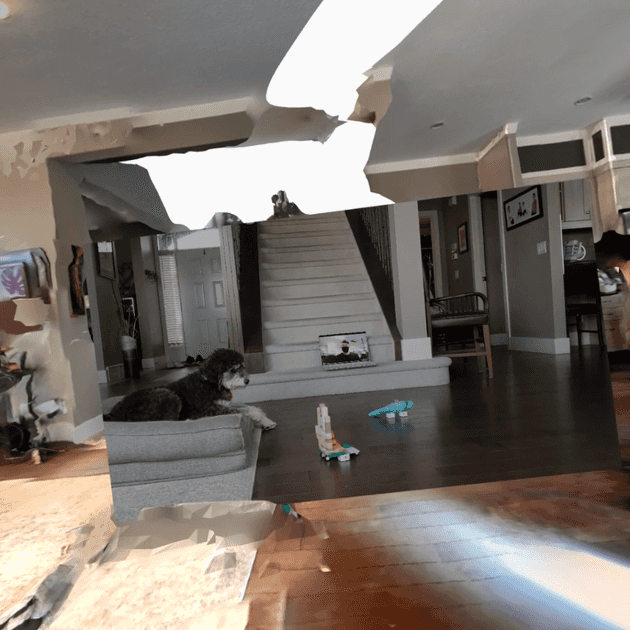

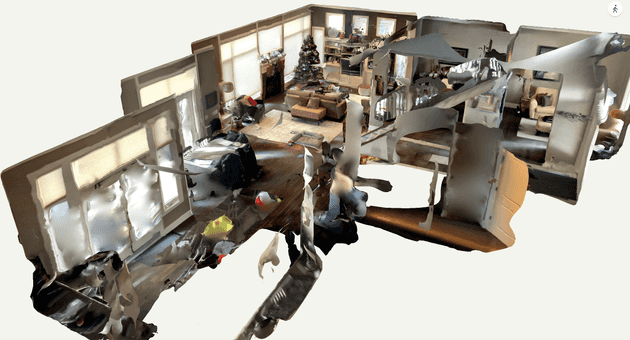

In PetPawty, pet owners can send video messages to their pets (see Figure 1). With the help of a pet sitter, the pets can watch the received messages while feeling Toio-powered haptic feedback that follows the gestures the owners make in the videos (see Figure 2). The pets can also send video messages back to their owners, again with the help of a pet sitter (see Figure 3). Owners can watch the received videos in a conventional desktop mode or an immersive VR mode, with Toio-powered haptic feedback that follows their pets’ gestures in the videos (see Figure 4). In VR mode, each video is embedded in a 3D reconstructed scene, at the spot where the recorded event took place in the real-world location that the scene reconstructs (see Figure 6). In this demo, the videos are embedded in front of the stairs because that is where the recordings took place (see Figure 5).
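The summary says the haptic feedback follows the gestures in each video, but not how the Toio motion is timed against playback; in the demo, a team member controlled the Toios by hand. Below is a minimal TypeScript sketch of one way to automate this, assuming each message carries timestamped gesture annotations. The `Gesture`, `GestureCue`, and `VideoMessage` types and the example file name are hypothetical, not part of PetPawty.

```typescript
// Hypothetical gesture labels; the write-up does not say how gestures are encoded.
type Gesture = "wave" | "highFive" | "pawShake";

// A gesture annotated on a video message, with its timing in seconds.
interface GestureCue {
  gesture: Gesture;
  startSec: number;
  durationSec: number;
}

// An asynchronous video message plus its (assumed) gesture annotations.
interface VideoMessage {
  sender: "owner" | "pet";
  videoUrl: string; // placeholder: storage and transport are not described
  cues: GestureCue[];
}

// Cues whose start time falls in (prevSec, nowSec], so each cue fires exactly
// once between two playback ticks, even when ticks arrive at uneven intervals.
function cuesToTrigger(msg: VideoMessage, prevSec: number, nowSec: number): GestureCue[] {
  return msg.cues.filter((c) => c.startSec > prevSec && c.startSec <= nowSec);
}

// The cue that is active at a given playback time, if any.
function activeCue(msg: VideoMessage, timeSec: number): GestureCue | undefined {
  return msg.cues.find(
    (c) => timeSec >= c.startSec && timeSec < c.startSec + c.durationSec,
  );
}

// Example message: the owner waves at 1 s and offers a high five at 3.5 s.
const demo: VideoMessage = {
  sender: "owner",
  videoUrl: "owner-message.webm",
  cues: [
    { gesture: "wave", startSec: 1.0, durationSec: 1.5 },
    { gesture: "highFive", startSec: 3.5, durationSec: 0.5 },
  ],
};

console.log(cuesToTrigger(demo, 0, 1.2).map((c) => c.gesture).join(",")); // wave
console.log(activeCue(demo, 3.7)?.gesture); // highFive
```

In a browser, `cuesToTrigger` could be called from the video element’s `timeupdate` event with the previous and current `currentTime` values, forwarding each returned cue to whatever code drives the Toio. Starting the previous time below zero (for example, at -1) lets a cue at 0 s fire as well. Triggering on start times crossed since the last tick, rather than checking only the current time, keeps a short cue from being skipped or fired twice when ticks arrive unevenly.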

Figure 1: The user sends a video message to his pet.

Figure 2: The pet watches the video from his owner with Toio-powered haptic feedback.

Figure 3: The pet sends a video message to her owner (with the help of her pet sitter).

Figure 4: The user watches the video from his pet in VR with Toio-powered haptic feedback.

Figure 5: The video is embedded in a 3D reconstructed scene of the user’s home.

Figure 6: 3D Reconstructed Scene from iPad Pro’s LiDAR camera via Polycam

Design Process

Our design process started with 10 preliminary design sketches from each of the three team members. We then chose Macks’ human-pet interaction idea as the base design, and each member developed 10 variants of it. Finally, we combined several of these variants into the final design. Below, we show our selected 10 base ideas, 10 refinement ideas, and a storyboard concept video.

Storyboard Concept Video

Preliminary Design

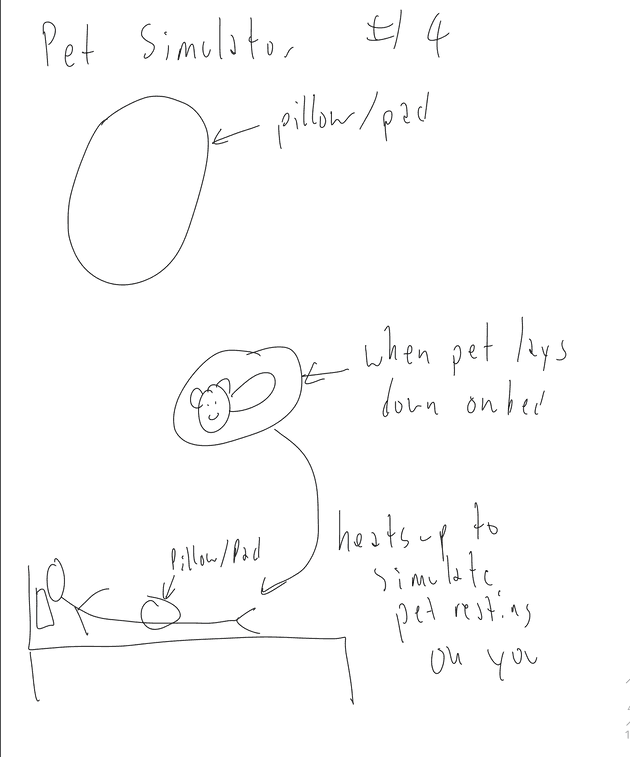

Final Base Design - Pet Simulator (Macks)

This idea is the one we based our refinements on. The user would have a pillow linked to their pet’s bed. When the pet lies down in the bed, the user’s pillow would heat up slightly to simulate the pet sleeping on the user. This would help users who are out of town or otherwise away from their pets feel as though their pet were still with them. We used this idea as the inspiration and general direction for our refinements.

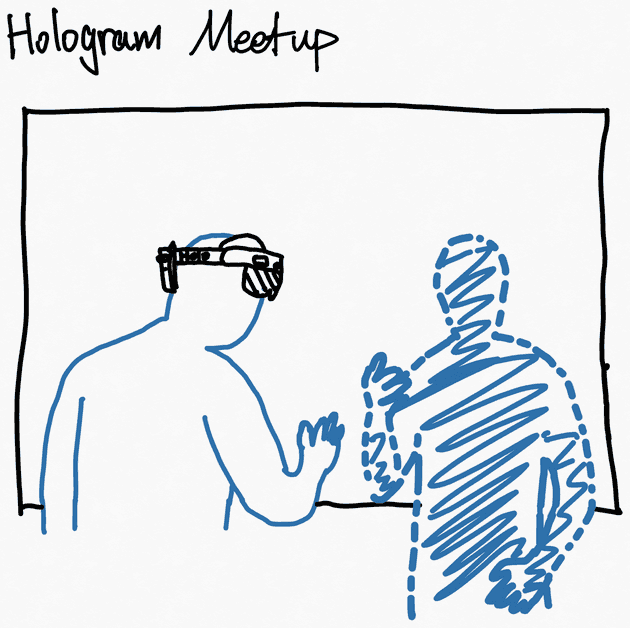

Hologram meetup (Tian)

This idea aims to increase the sense of co-presence between remote communicators by letting users see each other’s holograms through AR headsets. All participants’ behaviours and voices are synced with their holograms. This idea requires a sophisticated tracking and scanning setup with RGBD cameras and AR headsets, which we could not fully implement in the time remaining for the project. However, our final design does use an immersive environment.

Remote High Five (Tian)

This idea addresses the lack of physical touch during remote communication. A Toio-actuated hand model can be triggered to touch the user’s hand during a remote conversation, simulating a high five. This idea was eventually integrated into our final design and system implementation.

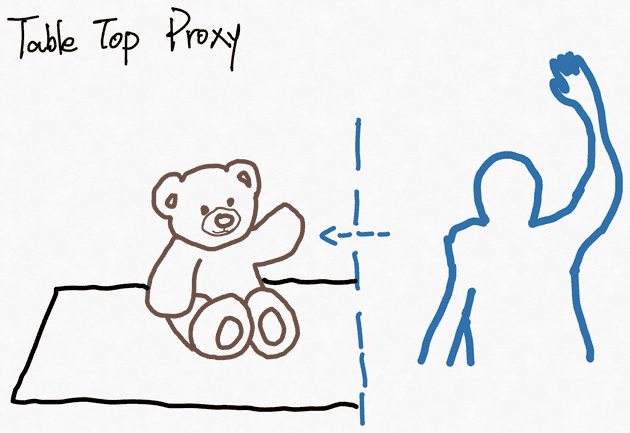

Table-Top Proxy (Tian)

This design uses a toy bear as the proxy for a remote communicator. The remote communicator’s bodily movements, such as hand waving, are synced to the toy bear in real time. We recognized that the movements the bear could reproduce would be limited mostly to the arms, so we did not proceed with this idea; we wanted to explore designs with more interaction techniques.

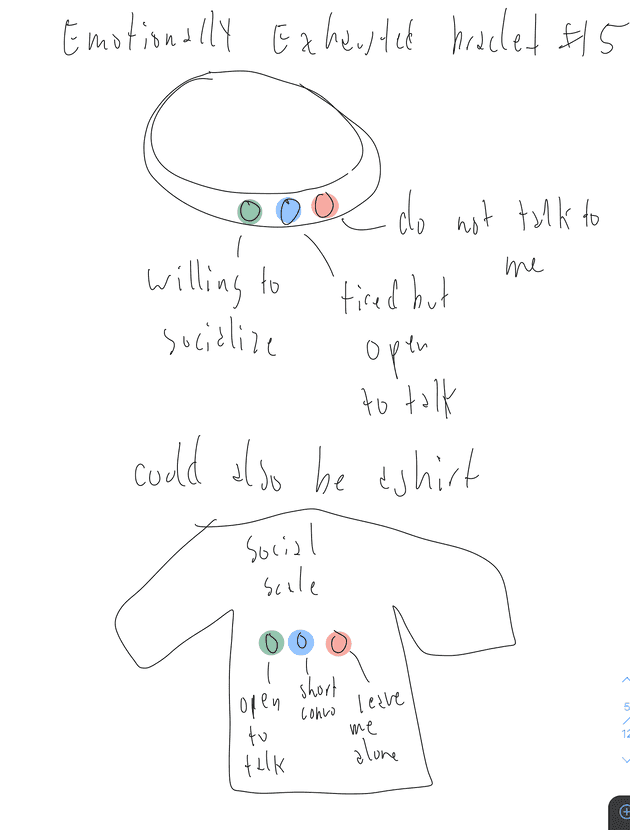

Social Measure Bracelet (Macks)

This idea is a bracelet with three lights. Green indicates to others that the user is open to socializing and full of energy. Yellow signals that the user is open to talking but is exhausted and may not want a long conversation. Finally, red signals that the user does not want to speak with others right now and needs space. The bracelet would help users show how social they feel at a given time. We felt this was too simple for a final project and wanted to go in a more unique direction, so we did not pursue it.

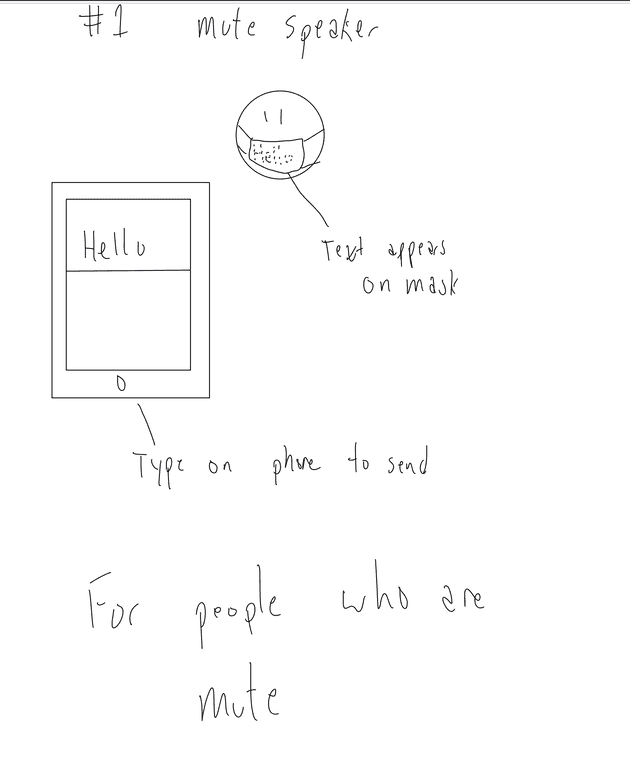

Mute Communication (Macks)

This idea aimed to make conversation easier for mute people. It would be a mask that displays the text the user types on their phone. This way, instead of staring at the user’s phone, the other person can read the text on the mask as it is being written, which makes the conversation feel more natural. We struggled with the mask’s electronics and decided we could not implement it with our current experience and the time remaining.

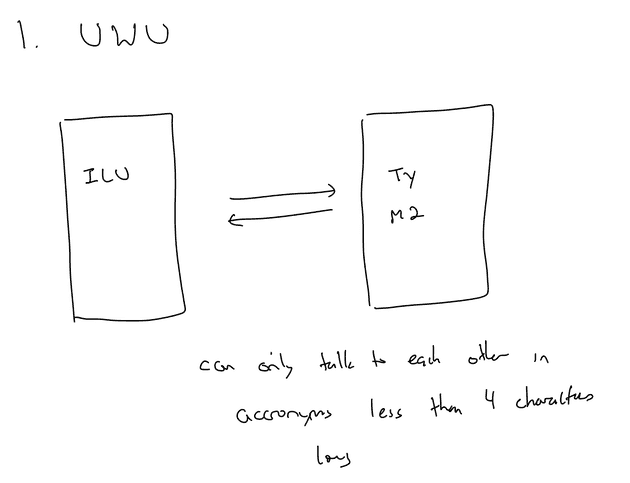

UWU Chat (Spencer)

This idea is for people who text in shorthand: users would only be allowed to communicate with abbreviations such as “brb”, “omg”, and “btw”. We decided not to go with this idea because, although it would be simple to implement, we felt our ideas could be more creative. We also felt the user experience would suffer, since longer phrases such as “WCPGW” (“What Could Possibly Go Wrong”) quickly become confusing.

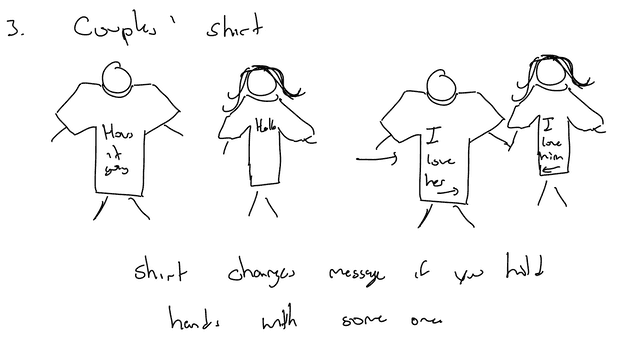

Couple’s Shirt (Spencer)

This idea is built around couples wearing matching shirts. When the users are apart, the shirts display a normal message or logo; once the users are within a certain distance of each other, the displays change to designs that complement each other, such as two halves of a heart with arrows pointing at each other. Although the idea was creative, we did not pursue it because we could not implement it in the time we had left.

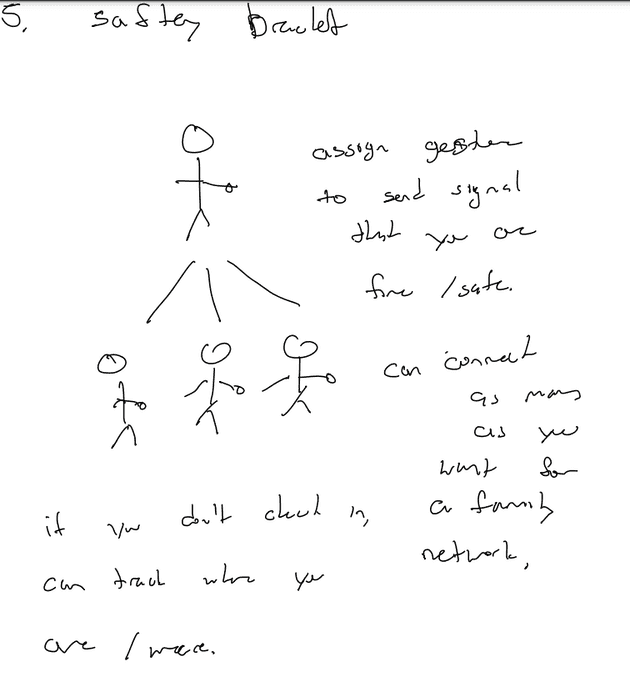

Safety Bracelets (Spencer)

This idea addresses people’s worries about the safety of their friends and family. The user’s family members would wear the bracelets, and a wearer who felt in danger could perform a specific gesture to send an alert to the other bracelets in their group, such as family and friends. The bracelet would then send the wearer’s location to the user’s phone every few seconds so the user can find them and check that they are safe. Wearers could also use other gestures to signal that they are safe. While this idea would be interesting to implement, we felt the location tracking might turn away some users, since they could see it as an invasion of privacy.

Heated Exchange (Spencer)

This idea builds on the fact that many couples give each other matching clothing, especially around the winter holidays. Couples with matching sets could share warmth: the clothing would heat up when both partners are wearing their matching pieces at the same time. The clothing could be scarves, mittens, hats, etc. While this idea would promote social interaction between couples, we felt the wiring for the heating and the sensors to detect whether both partners were wearing the clothing would be too hard to implement in the time we had left. Given our expertise, running that much wiring around the head and body would also have been a safety concern.

Design Refinement

Final Design of the VR Component - VR and Toio Haptics

This idea combines several of our ideas, including bidirectional Toio haptic feedback, the Toio high five, and first- and third-person VR chat. It is the idea we ended up implementing as the VR component of the final system.

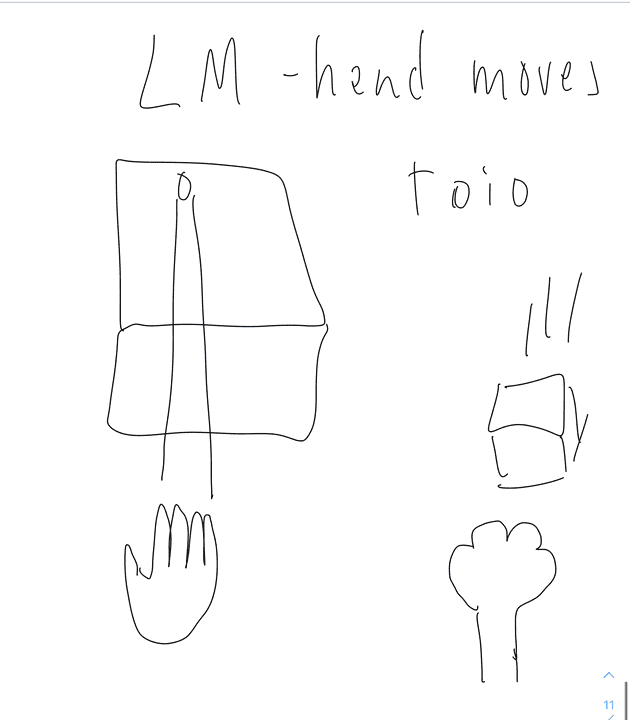

Toio Movement for Dogs and Users

This refinement focuses on our Toio-powered interactions. We initially planned to have Toios move to simulate the pet’s paw for the user, and we also wanted to give the pet some kind of simulation of the user. This sketch portrays a Toio that the dog can give its paw to. We included this idea in the final design.

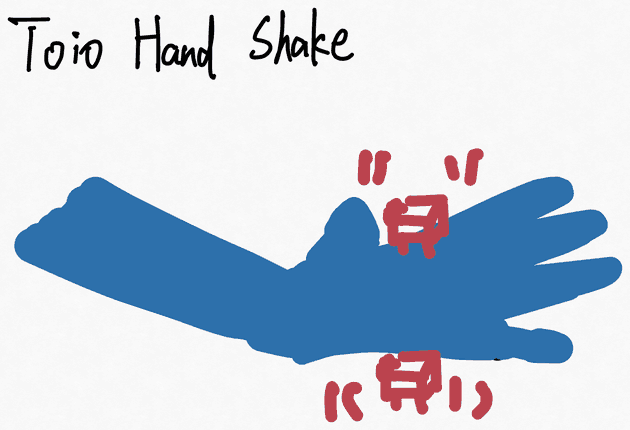

Toio Handshake

Similar to the remote high five, this design lets users feel the physical touch of their pets’ handshakes. The wiggling movements of the Toios simulate the touch of the pets’ paws in the users’ hands. This design was eventually integrated into our final design as a system component that provides haptic feedback simulating a pet putting its paw into its human friend’s hand.
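A wiggle can be produced by turning the cube a short distance one way and then back, which a two-wheeled robot like the Toio does by driving its wheels in opposite directions. The TypeScript sketch below builds such a pattern and encodes each step as a motor command. The step pattern, speeds, and timings are hypothetical, and the 8-byte layout reflects our reading of the toio Core Cube “motor control with specified duration” command; it is an assumption that should be checked against the official toio BLE specification and the course’s Toio sample before use.

```typescript
// One step of a wiggle: signed motor speeds (negative = backward) and a duration.
interface WiggleStep {
  left: number;
  right: number;
  durationMs: number;
}

// Hypothetical handshake pattern: turn the cube a little one way, then back,
// by driving the two motors in opposite directions and alternating each step.
function handshakeWiggle(cycles: number, speed = 40, stepMs = 120): WiggleStep[] {
  const steps: WiggleStep[] = [];
  for (let i = 0; i < cycles; i++) {
    steps.push({ left: speed, right: -speed, durationMs: stepMs });
    steps.push({ left: -speed, right: speed, durationMs: stepMs });
  }
  return steps;
}

// ASSUMED packet layout (verify against the toio BLE spec):
// [0x02, 0x01 (left), dirL, speedL, 0x02 (right), dirR, speedR, duration in 10 ms units]
function encodeStep(step: WiggleStep): Uint8Array {
  const motor = (v: number): [number, number] => [
    v < 0 ? 0x02 : 0x01, // assumed: 0x01 = forward, 0x02 = backward
    Math.min(115, Math.round(Math.abs(v))), // clamp to the assumed 0..115 speed range
  ];
  const [dirL, speedL] = motor(step.left);
  const [dirR, speedR] = motor(step.right);
  // In the assumed layout, a duration byte of 0 means "no time limit",
  // so clamp to 1..255 to make sure every step stops on its own.
  const duration = Math.min(255, Math.max(1, Math.round(step.durationMs / 10)));
  return Uint8Array.from([0x02, 0x01, dirL, speedL, 0x02, dirR, speedR, duration]);
}

const packets = handshakeWiggle(2).map(encodeStep);
console.log(packets.length); // 4
console.log(Array.from(packets[0]).join(",")); // 2,1,1,40,2,2,40,12
```

With Web Bluetooth, each packet could be written to the cube’s motor characteristic with `BluetoothRemoteGATTCharacteristic.writeValue()`, waiting `durationMs` between writes; the characteristic UUID is not given here and should come from the toio specification. Giving every step its own short duration means the cube stops by itself even if a later write is lost.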

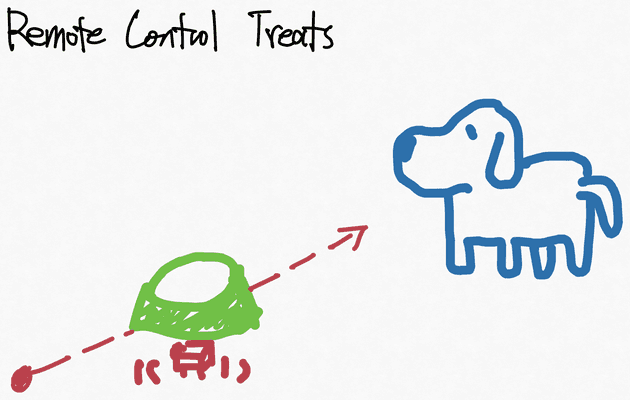

Remote-Controlled Treats

This design lets the user teleoperate ground robots to move a bowl of treats for their pets. Pet owners who are travelling can use this system to keep bonding with their pets by delivering their favourite treats. This design was eventually integrated into our final design as the proxy hands that interact with the pets.

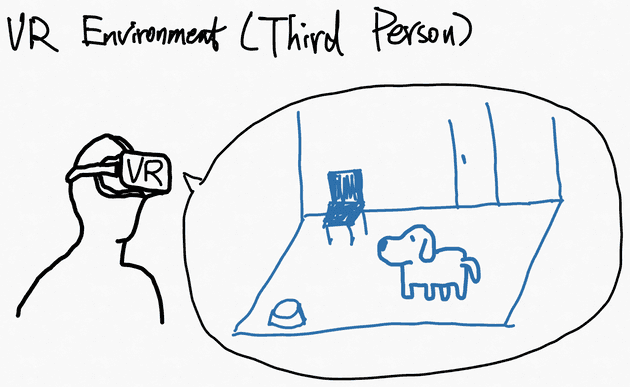

VR Chat (Third-Person)

This design variant lets users see their pets’ surroundings, such as the home. A VR scene of the home can also strengthen users’ sense of belonging by immersing them in a familiar environment. This design was integrated into our final design, which combines a reconstructed scene of the user’s home with a video of the pet from a third-person perspective.
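Placing a video where it was recorded amounts to storing an anchor position in the reconstructed scene’s coordinates and turning the video plane toward the viewer. Below is a minimal TypeScript sketch of the orientation step, assuming a plane whose front faces +z (the default for A-Frame’s `a-plane`) and a rotation in degrees, as A-Frame’s `rotation` component expects. `VideoAnchor` and the example coordinates are hypothetical, and the sketch does not use the Vimeo A-Frame component that the VR version was adapted from.

```typescript
interface Vec3 {
  x: number;
  y: number;
  z: number;
}

// Hypothetical record of where a video was recorded, in the coordinates of the
// reconstructed scene (for example, in front of the stairs).
interface VideoAnchor {
  videoId: string;
  position: Vec3;
}

// Yaw (degrees) that turns a +z-facing plane at `anchor` toward `viewer`,
// rotating only around the vertical y axis.
function yawTowardViewer(anchor: Vec3, viewer: Vec3): number {
  const dx = viewer.x - anchor.x;
  const dz = viewer.z - anchor.z;
  return (Math.atan2(dx, dz) * 180) / Math.PI;
}

// Format the rotation as an A-Frame-style "x y z" attribute string in degrees.
function rotationAttr(anchor: VideoAnchor, viewer: Vec3): string {
  const yaw = yawTowardViewer(anchor.position, viewer);
  return `0 ${yaw.toFixed(1)} 0`;
}

// Example: a video anchored 3 m to the viewer's left (negative x).
const stairs: VideoAnchor = { videoId: "pet-message-1", position: { x: -3, y: 1.5, z: 0 } };
console.log(rotationAttr(stairs, { x: 0, y: 1.6, z: 0 })); // 0 90.0 0
```

The resulting string could be applied with `el.setAttribute("rotation", ...)` on the video entity. Rotating only around the vertical axis keeps the video upright even when the viewer’s head is above or below the anchor.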

VR Chat (First-Person)

This design aims to let users chat with their pets in VR as if they were meeting face to face. In VR, owners can see their pets’ faces as if the pets were right in front of them. This design was integrated into our final design as a feature that lets users see videos of their pets from a first-person perspective.

Covid Necklace

This idea was an attempt to stay focused on our human-pet interaction theme. It would be a necklace showing the owner’s COVID immunization status. To enter a park with their pet, the user would have to wear the necklace, and it would have to show green. Green would mean the owner has had all their shots and the dog is safe. If the necklace is red, the user has not had all their shots and would be denied entry to the park. We felt this was not a direction we wanted to pursue, so we decided against this idea.

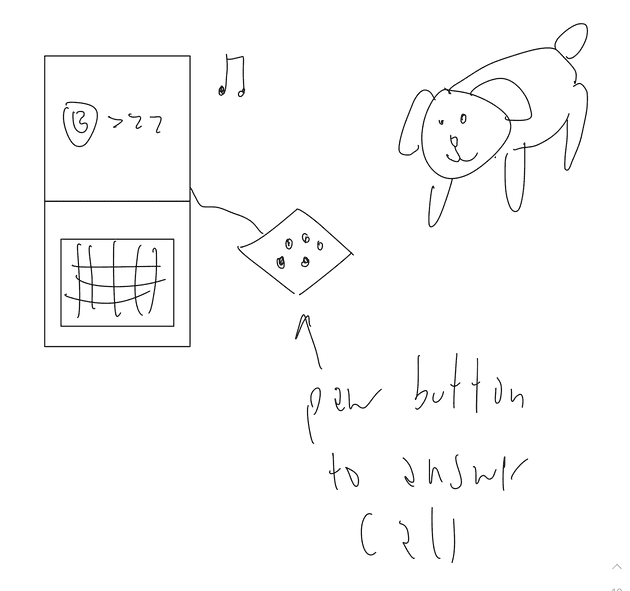

Paw Activated Computer

This idea would help a dog turn on a computer to join a Zoom call with the user. It could work either as a button the dog presses to accept a call from the user, or as a button that turns the computer on and starts the call right away. We wanted a simple way for the dog to connect with its human. However, we realized we would need to train the dog to understand how the button works, and keeping the computer on like this would be costly for the users. Thus, we decided not to proceed with this idea.

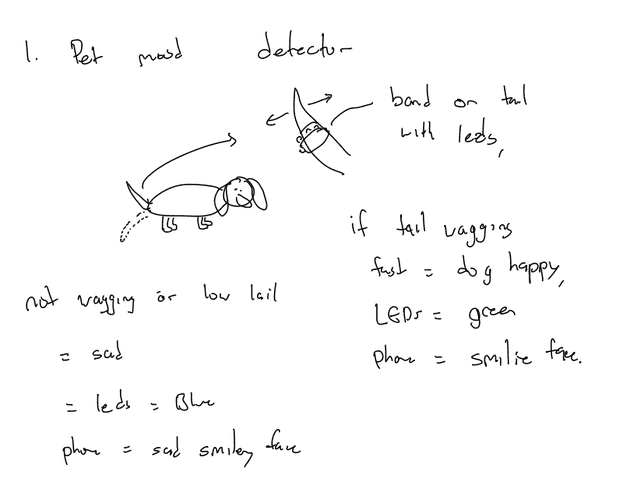

Pet Mood Detector

This design uses a proximity sensor to keep a sleeping user’s belongings safe from thieves. The user would place the device on a table, where it would scan the area and play an alarm to wake the user if anyone entered the zone. This would let users sleep while keeping their belongings safe; at U of C, some people have had things stolen while they slept. We decided not to proceed with this idea because, in the time we had left, it would have been difficult to control the scanner so that it excludes the user from the scan.

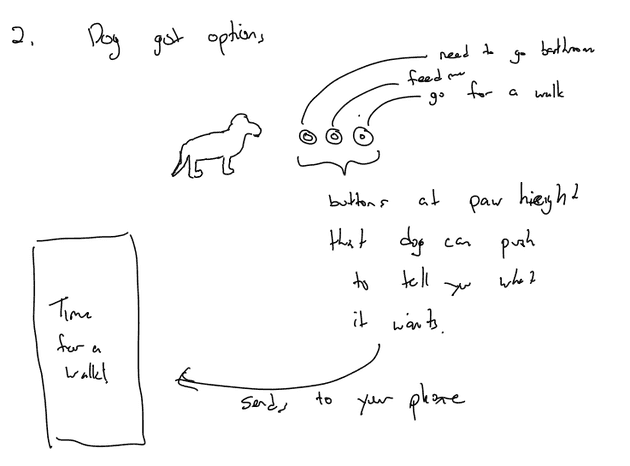

Dog Options

This refinement is based on human-to-pet interaction and centres on buttons placed in the pet’s area that let it alert the owner to what it needs. The basic version has three buttons: one to alert the owner that the pet is hungry, another to alert the owner that it needs to go to the bathroom, and one to alert the owner that it is time for a walk. While we liked this idea, it would take a lot of training for the pet to learn which button produces which result. Because of time limitations, and because none of us owns a dog (that we could train easily), we did not go with this idea.

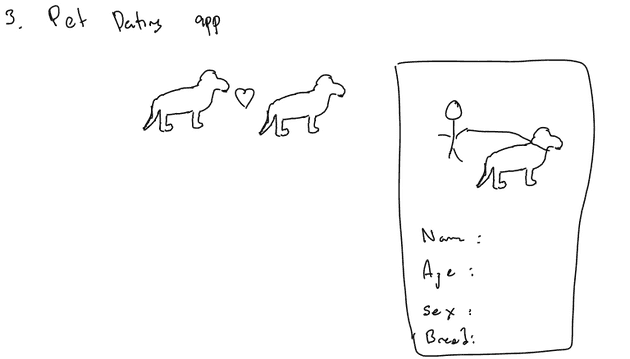

Moonlight Walks

This idea targets pet-to-pet communication and is based on a dating app for pets. Owners could register their pets on the app with name, sex, breed, and age, along with some likes and hobbies. Owners could then swipe left or right, as on Tinder, based on whether they think their pet would like a potential mate. Although the idea was fun, we decided not to go with it because we wanted to focus on human-to-pet communication rather than pet-to-pet. Also, after a little research, we found that most pets these days are spayed or neutered, partly for health reasons, which makes this idea unrealistic.

Project Contribution

- Sketches: Our design process started with 10 preliminary design sketches from each of the three team members. We then chose Macks’ human-pet interaction idea as the base design, and each member developed 10 variants of it. Finally, we combined several of these variants into the final design, which became the basis for the storyboard concept video, the system implementation, and the demo video.

- Storyboard Concept Video: We brainstormed and filmed our storyboard concept video together; Spencer moved the props, Tian filmed the video, and Macks acted as the user. Macks also recorded a voice-over describing our project design. Tian edited the video and uploaded it to YouTube.

- Implementation: Macks and Spencer collaboratively worked on the desktop version of PetPawty. Tian worked on the program’s VR version and Toio control.

- Demo: We brainstormed and filmed our demo video together; Spencer recorded the video, Tian controlled the Toios, and Macks acted as the user. Macks also recorded a voice-over describing our project design. Tian edited the video and uploaded it to YouTube.

References

- [Code] Course instructor’s code sample on Toio control.

- [Code] Course instructor’s code samples on web development and video recordings via webcams.

- [Doc] HTML, CSS, and JavaScript documentation from MDN and W3Schools.

- [Doc] VR component adapted from the Vimeo A-Frame component examples from the Getting Started guide and its full documentation.